Archive for November, 2011

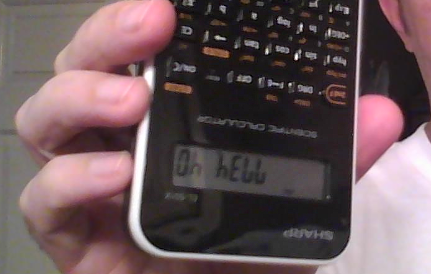

Calculation and Compilation in ArcMap

Who says a calculator can’t learn natural language?

So whatever happened to VBA in the Field Calculator? Before 10.0 I grew accustomed to using VBA to leverage all sorts of geometry-related interfaces. With 10.0 Esri has replaced VBA with Python and VBScript. I’m not sure why support for .NET wasn’t added, considering that both the C# and VB.NET compilers are part of the standard .NET Framework installation.

The .NET Framework ships with the CodeDOM classes (in System.CodeDom.Compiler), which can compile source code into an in-memory assembly. Maybe once ArcGIS supports .NET 4.0, I’ll adapt this proof-of-concept to use AvalonEdit. The Visual Studio project files can be downloaded here.

    // Requires: using System; using System.CodeDom.Compiler;
    public CompilerResults Compile()
    {
        // m_Language selects the compiler, e.g. "CSharp" or "VisualBasic".
        CodeDomProvider provider = CodeDomProvider.CreateProvider(m_Language);
        var parameters = new CompilerParameters();
        parameters.GenerateInMemory = true;    // keep the assembly in memory
        parameters.GenerateExecutable = false; // build a library, not an .exe
        foreach (string reference in m_References)
        {
            parameters.ReferencedAssemblies.Add(reference);
        }

        var results = provider.CompileAssemblyFromSource(parameters, m_Source);
        // Errors also holds warnings, so test HasErrors rather than Count.
        if (!results.Errors.HasErrors)
        {
            var type = results.CompiledAssembly.GetType(TYPENAME);
            if (type == null)
                throw new Exception("type not found: " + TYPENAME);

            // Create the instance first, then cast, so each failure gets its own message.
            object o = Activator.CreateInstance(type);
            if (o == null)
                throw new Exception("unable to create instance");

            m_kalkulation = o as IKalkulation;
            if (m_kalkulation == null)
                throw new Exception("unable to cast to IKalkulation");
        }
        // On failure the caller can inspect results.Errors.
        return results;
    }

That allows us to take the dynamically generated class (which implements IKalkulation) and loop through each row in a feature layer (or standalone table), performing the calculation using C# code entered into a form.

    while ((row = cur.NextRow()) != null)
    {
        i++;
        var obj = this.m_kalkulation.Kalkulate(row);
        row.set_Value(idx, obj);
        row.Store();
        //System.Threading.Thread.Sleep(300); use for testing cancellation
        int newPct = (int)((double)i * 100.0 / (double)total);
        if (newPct != pct)
            worker.ReportProgress(newPct);
        pct = newPct;
        if (this.CancellationPending)
            break;
    }
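The source string handed to Compile() has to define a class that implements IKalkulation. The real template is in the project download; as a rough sketch only, an expression typed into the form could be wrapped along these lines. The IRowStub interface, the Kalkulation class name, and the SourceTemplate.Wrap helper are placeholders of mine so that the snippet builds without ArcObjects. Only the names IKalkulation and Kalkulate come from the code above, and their exact signatures are assumed.

```csharp
using System;

// Placeholder for ESRI.ArcGIS.Geodatabase.IRow so the sketch builds without ArcObjects.
public interface IRowStub
{
    object GetValue(int fieldIndex);
}

// Assumed shape of the contract; the real interface may differ.
public interface IKalkulation
{
    object Kalkulate(IRowStub row);
}

public static class SourceTemplate
{
    // Hypothetical class name; Compile() would look this up via TYPENAME.
    public const string TypeName = "Kalkulation";

    // Wraps a single expression typed into the form in a complete class definition.
    public static string Wrap(string expression)
    {
        return
            "using System;\n" +
            "public class " + TypeName + " : IKalkulation\n" +
            "{\n" +
            "    public object Kalkulate(IRowStub row)\n" +
            "    {\n" +
            "        return " + expression + ";\n" +
            "    }\n" +
            "}\n";
    }
}
```

For example, SourceTemplate.Wrap("((double)row.GetValue(2)) * 0.3048") would produce source for a class whose Kalkulate converts the value in field 2 from feet to metres.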

GIS: Where are the Languages at?

We spend a lot more time debating which general-purpose language is better for GIS (C#, VB, Python, Java, etc.) than we spend asking what GIS-specific languages should look like. Notice how the language choice is hidden by the compiler – it is an implementation detail. The job of the compiler is to convert human-readable formal language expressions into machine language. In addition to general-purpose languages like C#, VB.NET, etc., I think GIS deserves its own languages. Sure, we have the shape comparison language, but I think there is room for improvement. It should be possible to write CodeDOM providers for things like rasters, topologies and networks.

Raster Languages

Esri once supported a product called ArcGRID which allowed several expressions (DOCELL, IF, and WHILE) that are no longer supported in Spatial Analyst. It would be possible for Esri to write a CodeDOM provider that would compile these expressions. Otherwise the same approach taken with the proof-of-concept could be adapted to work with pixels instead of IRows.
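To make the "pixels instead of IRows" idea concrete, here is a minimal sketch of a per-cell evaluator. It is not ArcObjects code: the two-dimensional array is a stand-in for a raster pixel block, and CellCalculator is a name invented for the illustration. The delegate plays the role that the compiled IKalkulation instance plays for rows.

```csharp
using System;

public static class CellCalculator
{
    // Applies a per-cell expression to every pixel, in the spirit of DOCELL.
    // The 2D array stands in for a raster pixel block.
    public static double[,] Apply(double[,] cells, Func<double, double> expression)
    {
        int rows = cells.GetLength(0);
        int cols = cells.GetLength(1);
        var result = new double[rows, cols];
        for (int r = 0; r < rows; r++)
        {
            for (int c = 0; c < cols; c++)
            {
                result[r, c] = expression(cells[r, c]);
            }
        }
        return result;
    }
}
```

An IF-style expression then becomes an ordinary lambda, for instance CellCalculator.Apply(elevation, z => z > 1000.0 ? 1.0 : 0.0) to flag cells above 1000.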

Topology Languages

Likewise, workstation ARC/INFO allowed one to easily find polylines where two polygons meet that have the same attribute – simply set up two RELATEs based on LPOLY# and RPOLY# and RESELECT the polylines based on an expression where the left and right attributes are not equal. In ArcMap this requires some tedious code. It seems like Esri could write a CodeDOM provider to allow topology rules to be used as search expressions and not just enforcement of business rules.
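The left/right comparison itself is small once each edge knows its neighbouring polygons. The sketch below uses plain in-memory records rather than ArcObjects or a coverage; Edge, BoundaryQuery, and the attribute dictionary are hypothetical stand-ins for the arc table and the two RELATEs.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical edge record carrying the ids of the polygons on either side,
// like the LPOLY# and RPOLY# items of a coverage arc.
public class Edge
{
    public int Id;
    public int LeftPoly;
    public int RightPoly;
}

public static class BoundaryQuery
{
    // Returns edges whose left and right polygons carry different attribute values.
    // Edges bordering a polygon with no attribute entry (e.g. the outside) are skipped.
    public static List<Edge> WhereSidesDiffer(
        IEnumerable<Edge> edges, IDictionary<int, string> polyAttribute)
    {
        return edges
            .Where(e => polyAttribute.ContainsKey(e.LeftPoly)
                     && polyAttribute.ContainsKey(e.RightPoly))
            .Where(e => polyAttribute[e.LeftPoly] != polyAttribute[e.RightPoly])
            .ToList();
    }
}
```

Flipping the comparison to == would select the edges that separate polygons sharing the same attribute instead.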

Network Languages

For geometric networks, custom traceflow solvers are a very powerful tool. Imagine being able to use a language that made it easier to recursively build trees based on some network connectivity logic. Traversing the network using IForwardStar is just too tedious. A friendlier language is needed. Either a CodeDOM provider or an IQueryable LINQ provider could support languages specifically geared towards network traversal – and would make things like ad hoc custom tracing easier.
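As an illustration of the kind of recursion such a language would hide, here is a sketch that builds a tree from plain connectivity data. It does not call IForwardStar; the adjacency dictionary stands in for whatever the network reports as a junction's neighbours, and TraceNode and TraceTree are names made up for the example.

```csharp
using System;
using System.Collections.Generic;

public class TraceNode
{
    public int Junction;
    public List<TraceNode> Children = new List<TraceNode>();
}

public static class TraceTree
{
    // Recursively builds a tree of the junctions reachable from 'start'.
    // 'adjacency' maps a junction id to the ids of its neighbouring junctions.
    public static TraceNode Build(int start, IDictionary<int, int[]> adjacency)
    {
        return Build(start, adjacency, new HashSet<int>());
    }

    private static TraceNode Build(
        int junction, IDictionary<int, int[]> adjacency, HashSet<int> visited)
    {
        visited.Add(junction);
        var node = new TraceNode { Junction = junction };
        int[] neighbours;
        if (adjacency.TryGetValue(junction, out neighbours))
        {
            foreach (int next in neighbours)
            {
                if (visited.Contains(next))
                    continue; // skip loops and the junction we came from
                node.Children.Add(Build(next, adjacency, visited));
            }
        }
        return node;
    }

    // Counts the nodes in a tree, handy for sanity checks.
    public static int Count(TraceNode node)
    {
        int total = 1;
        foreach (TraceNode child in node.Children)
            total += Count(child);
        return total;
    }
}
```

The connectivity logic lives entirely in how the adjacency data is produced, which is the part a dedicated trace language could let users express directly. A very large network would want an explicit stack instead of recursion.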

Language is a virus. -William S. Burroughs

In other words, if your language doesn’t go viral, it won’t survive. With Stack Exchange putting so many different carriers in close contact with one another, a new spatial language seems as inevitable as the next flu season.

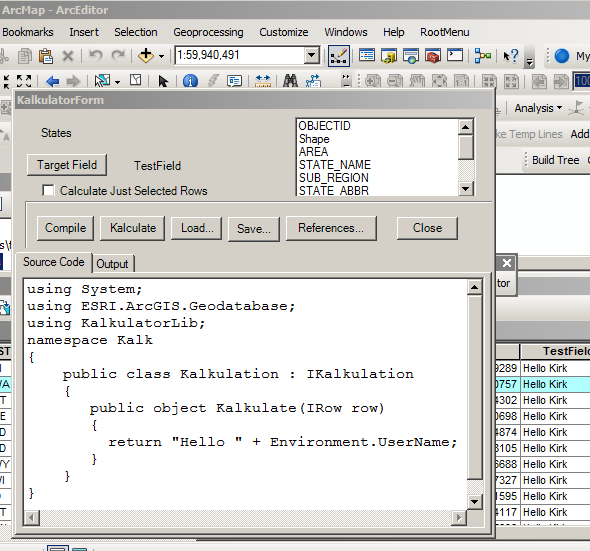

It’s all about the data

Very early in my GIS career, about 1995, my coworkers and I made a big map (3ft by 6ft at 1:250,000). It took several months and was the best we could do at the time. It was good, but, in my mind, not as good as I wished. It was printed on a four-colour 300dpi printer: the lines were fuzzy, colours dithered, fills crosshatched, and paper speckled. The base data comprised several tiles, the edge-matching was mediocre, and the seams were visible.

A decade and some later found me working in an organization with deeper pockets, access to a high quality 2880x1440dpi printer with UV resistant inks (11 colours), seamless edge-matched data, and shaded relief images. A person wandered into my office and unfurled a raggedy old map. I immediately recognized it as the one we did in 1995. The corners were dog-eared and full of pin holes, numerous tears adorned the edges, and it was wrinkled throughout. The colours had faded, the once blue rivers now a faint pink. It had been traveling from meeting to meeting, wall to wall, discussion to discussion, for 13 years and looked it.

She asked, hopefully yet resigned to a negative reply, if I could, perhaps, scan the map and print a new copy. (She had no idea I was one of the authors; her coming to our shop was just chance.) Excitedly I jumped at the chance to furnish her with a new map of the same area, made just the year before, but with much higher production value. Richer, deeper, more varied colours. Fine, crisp lines. Smooth typography, following the curve of watercourses, legible even at 6pt. No edge-matching seams. Shaded relief background smoothly blended with vegetated areas and ice fields. Finally I could fill that lack from long ago, with flourish!

She eyed the proffered replacement for a time, and then politely declined. Yes it was beautiful, she said, but not as good as the old one. I was shocked. I’m sure my mouth hung open. You see, one thing we’d done all those years before, with equipment that had less computing power than today’s phones, was add place names. Lots and lots of place names, based on local knowledge. Oh. Our new maps don’t have those.

The end result? We don’t have a map-size scanner, so I carefully taped all the rips and tears on “old faithful”, double taped the edges, tripled the corners, and sent it and her back on their rounds. The old thing is probably still being used now.

A predecessor once told me, “It’s all about the data. All that other stuff is just fluff that comes and goes”. At the time he was referring to software, but this story makes it clear it applies to other technology like computers and printers as well. I didn’t really get it then, but I’m closer to understanding what he means now.

(The images are representative, they’re not the actual maps in the story. Hat tip to Bruce Mackenzie then of Ministry of Environment, Lands & Parks, BC.)